If you want to create amazing graphics, first find something that inspires you. You’ll need that motivation to push through the challenges you’ll face.

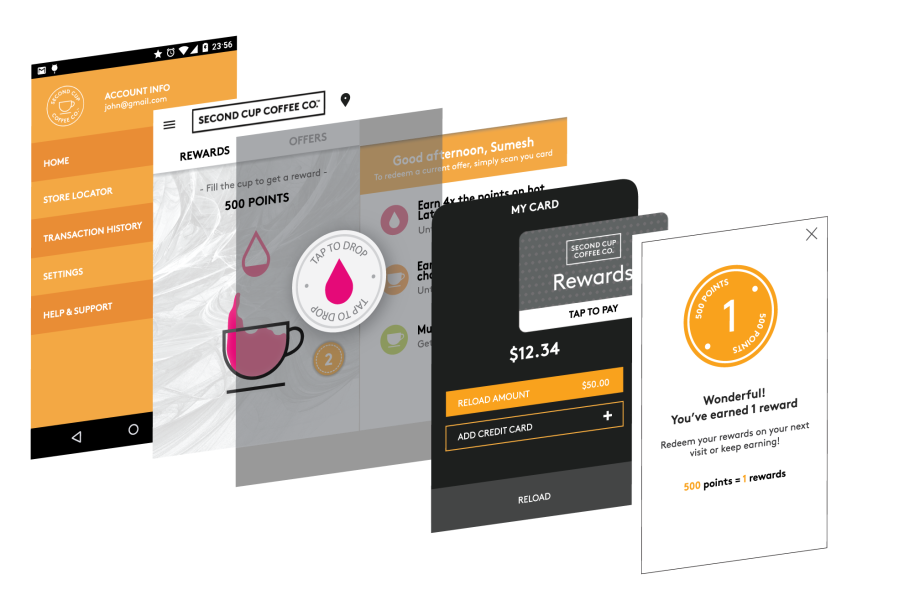

For the Second Cup project, we found something that inspired us: an interactive splash feature, a liquid simulation in a cup.

It’s vital to understand the details of the Android graphics pipeline, from application code to display, if you want to create innovative graphics that’ll engage your audience.

Keeping it Lightweight

Android’s Material Design represents views as sheets of lightweight paper. With graphics-intensive apps (like Second Cup), your primary challenge is keeping the user experience smooth.

As the graphical complexity of an app increases, people naturally expect it to slow down or drag. But an app that feels lightweight, despite doing something extraordinary, creates a powerful effect on users.

So let’s start at the first part of the graphics pipeline: the UI thread.

UI Thread

The UI thread is like an assembly line, running tasks in sequence, one at a time. Any code that changes the views in your UI must run on the UI thread. Common examples include textView.setText() and view.setBackground(), or any other change that has a visible effect on the views.

You add your own code to the UI thread by overriding or extending lifecycle methods like onCreate(), onPause(), etc. Since all of these run on the UI thread, any code you add in your overridden method also runs on the UI thread. Other code that runs on the UI thread includes UI event callbacks such as onClick() or onTouch().

You can also add your own custom code to the UI thread anytime you want by posting a Runnable or a message to the UI thread. The most common way to do this is to post a Runnable to a view:

myView.post(new Runnable() {

public void run() {

// Code to run

}

});

You can also post to the UI thread through the activity:

myActivity.runOnUiThread(new Runnable() {

public void run() {

// Code to run

}

});

Keep in mind that the two are not the same. Posting through the view always adds the Runnable to the end of the queue. Calling runOnUiThread() runs the code immediately if you are already on the UI thread; from any other thread, it posts the Runnable to the queue as well.
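To make the difference in ordering concrete, here is a minimal plain-Java model of a UI queue. It is only a sketch and does not use any Android classes: UiQueueModel, drain() and demo() are hypothetical names invented for illustration, and the model assumes that runOnUiThread() is being called from code already running on the UI thread.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/** Plain-Java model of a UI message queue. Illustration only, not Android code. */
class UiQueueModel {
    private final Queue<Runnable> queue = new ArrayDeque<>();
    private boolean onUiThread = false;

    /** Models View.post(): the task always goes to the end of the queue. */
    void post(Runnable task) {
        queue.add(task);
    }

    /** Models Activity.runOnUiThread(): run now if already on the UI thread, else queue. */
    void runOnUiThread(Runnable task) {
        if (onUiThread) {
            task.run();
        } else {
            queue.add(task);
        }
    }

    /** Runs queued tasks one at a time, the way the UI thread works through its queue. */
    void drain() {
        onUiThread = true;
        try {
            Runnable next;
            while ((next = queue.poll()) != null) {
                next.run();
            }
        } finally {
            onUiThread = false;
        }
    }

    /** Records the order in which work runs when both styles are used inside a UI task. */
    static List<String> demo() {
        UiQueueModel ui = new UiQueueModel();
        List<String> log = new ArrayList<>();
        ui.post(() -> {
            log.add("A start");
            ui.post(() -> log.add("posted"));              // waits for its turn
            ui.runOnUiThread(() -> log.add("immediate"));  // runs right away
            log.add("A end");
        });
        ui.drain();
        return log;
    }
}
```

Calling UiQueueModel.demo() records "A start", "immediate", "A end", "posted": the posted task waits until the current task has finished, while the runOnUiThread() task runs in the middle of it.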

When you want the UI to update to the screen, you add the draw() method onto the queue (indirectly).

You do this by invalidating all or part of the hierarchy. In code this means calling invalidate() on the view that contains the other views (the parent). If you have a running animation, Android automatically adds a draw() call once every frame, which is about once every 16 ms.

When the draw call comes along, it pushes all or part of your views down to the GPU to get drawn by OpenGL.

You can even customize the draw call by overriding onDraw(). You would then take the Canvas that is passed in and use it to draw shapes and paths. (More on that in another article).

Changes to the UI, and the drawing of the UI have to be done one after the other, so they don’t step on each other’s toes. You don’t want to be changing a view at the same time it is being drawn. This one-after-another style of drawing is called the Single Threaded Model (because there is only one thread that does everything).

Problem 1: Blocking the thread

Unfortunately, the Single Threaded Model has some drawbacks. The first problem you might encounter happens when some of the code takes too long to run. The drawing code has to wait its turn, and so the screen doesn’t get updated regularly. This makes for a less than smooth UI.

To keep the UI updating smoothly, make sure any code you write on the UI thread is thin and lightweight. Android will be able to slip its drawing calls between the cracks. Do your changes in bits and pieces, rather than in one chunk. Or, more importantly, if your code doesn’t change anything in the UI, don’t do it on the UI thread at all! Create a background thread and do it there.
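Here is a rough sketch of that hand-off in plain Java. It is not Android code: two single-threaded executors stand in for the UI thread and a background thread, and sumOfSquares() is a hypothetical stand-in for any slow work that doesn't touch views. In a real app, the last step would be something like posting a Runnable back to a view.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Sketch: do slow work on a worker thread, then hand only the result to the "UI" thread. */
class BackgroundWorkSketch {

    /** Hypothetical stand-in for slow work that never touches a view. */
    static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 1; i <= n; i++) {
            total += (long) i * i;
        }
        return total;
    }

    /** Returns a message built on the "ui" thread from a value computed on the worker. */
    static String demo() {
        ExecutorService uiThread = Executors.newSingleThreadExecutor(r -> new Thread(r, "ui"));
        ExecutorService worker = Executors.newSingleThreadExecutor(r -> new Thread(r, "worker"));
        try {
            return CompletableFuture
                    // Heavy part: runs on the worker, so the "UI" queue stays free.
                    .supplyAsync(() -> sumOfSquares(1000), worker)
                    // Light part: only the quick update runs on the "UI" thread.
                    .thenApplyAsync(
                            total -> Thread.currentThread().getName() + " shows " + total,
                            uiThread)
                    .join();
        } finally {
            uiThread.shutdown();
            worker.shutdown();
        }
    }
}
```

The point of the design is that the UI thread only ever sees the short final step, so drawing calls can still slip in between.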

Animation

Keeping the UI thread clear is especially important when you need to animate something at a smooth frame rate. Creating and running an animation sets up a series of draw calls at certain intervals, and none of these draws should be blocked.

Creating smooth animations is also important to create that flawless look. We discovered that if you want a smooth transition between two animations, you should set them both up in an AnimatorSet instead of waiting for one to finish before starting the other.

When we wanted to animate the card flipping over to show the backside, we wanted a seamless animation, so that it would look like one card with two sides, even though they were two different views.

AnimatorSet flipSet = new AnimatorSet();

// Show front animation
ObjectAnimator cardFrontAnimation = ObjectAnimator.ofFloat(cardFront, "rotationY", 0f);
...

// Hide back animation
ObjectAnimator cardBackAnimation = ObjectAnimator.ofFloat(cardBack, "rotationY", 90f);
...

flipSet.playTogether(cardBackAnimation, cardFrontAnimation);
flipSet.start();

Doing this prevents a noticeable jitter between the front animation and the back one.

Problem 2: Keeping draw() short

The second problem you might run into is that the draw() call may take too long to execute. When it goes through the hierarchy of views you’ve created, the more views it has to draw, the longer it takes to finally finish drawing the whole thing.

The Second Cup Rewards app got pretty heavy pretty fast. Most of the important features were on the main page, and this included the liquid physics effect. The designers wanted everything important to be accessible from the main page, and left very little to the side menu.

If you want your drawing to run smoothly, you have to be constantly “flattening” the layers. This means finding ways to reduce the number of views and layers that have to be drawn in order to show everything on the screen. Flattening view layers is the one place code optimization really pays off.

To optimize your app, I recommend using the overdraw tool (Debug GPU overdraw) in developer options. The overdraw tool tells you how many times each region of your UI is being drawn in a frame. It tints that region, and the colour tells you the number of times it is being drawn. No tint means once, blue means twice, green means three times, and pink means four times. Red means five times or more; after that, it stops counting.
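If it helps to keep the colour scale straight, it can be written down as a small lookup. This is just a plain-Java memory aid, not part of any Android API; OverdrawLegend and tintFor() are hypothetical names.

```java
/** Memory aid for the overdraw overlay colours. Not an Android API. */
class OverdrawLegend {

    /** Returns the overlay tint for a pixel that is drawn drawCount times in one frame. */
    static String tintFor(int drawCount) {
        if (drawCount < 1) {
            throw new IllegalArgumentException("drawCount must be at least 1");
        }
        switch (drawCount) {
            case 1:  return "none";   // drawn once: no overdraw
            case 2:  return "blue";   // overdrawn once
            case 3:  return "green";  // overdrawn twice
            case 4:  return "pink";   // overdrawn three times
            default: return "red";    // overdrawn four or more times
        }
    }
}
```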

Using overdraw helped us discover one of our problems pretty fast. When we turned it on, most of our app was bright red.

We made this app before Material AppCompat libraries were introduced. We had a slide up panel that contained the digital card, and we wanted to create a drop shadow effect under it. We tried to approximate a drop shadow by creating a layer list, and using transparent grey shapes. But we hadn’t realized that each layer in a layer list counts as an extra draw.

We shifted to using an image (a 9-patch), and the result was significantly better.

There are many techniques to reduce overdraw. For instance, instead of setting a background colour on your layout (android:background), set the windowBackground colour in the theme. This will save you one whole overdraw.

<style name="AppTheme" parent="android:Theme.Light">

<item name="android:background">@color/white_opaque</item>

<item name="android:windowBackground">@color/my_colour</item>

<item name="android:colorBackground">@color/my_colour</item>

</style>

Obsessively monitoring overdraw is essential in any graphics-intensive app.

You should also test regularly on older phones. Older phones act as canaries in the coal mine. They more noticeably announce any regions of lag in your app, which may be hidden by newer, faster phones.

Seamless

In many ways, graphics is about creating illusions. With the Second Cup app, we were trying to create the appearance of liquid ink flowing over the paper of the app. It’s a magic trick.

But if you want to maintain an illusion, you can’t let the seams show.

When we wanted to add liquid effects to our app, we started by copying over an open source app called LiquidFun Paint. If you ever want to just play around with LiquidFun and see what it can do, you can download this app from the Play Store for free.

Breaking apart their code and copying it over was the best place to start implementing liquid effects.

LiquidFun Paint draws the liquid effect to an Android UI element called GLSurfaceView. GLSurfaceView is just a “sheet” you can draw graphics onto using OpenGL. It’s a convenience class, built on top of a regular SurfaceView, that sets up OpenGL for you, and then runs it smoothly. In most cases this works great, and I recommend using it if you want high-efficiency OpenGL graphics. It had one or two downsides, though.

When we loaded the main screen, the liquid display part of the app (the GLSurfaceView) was black, for up to a second. In addition, when we dragged the drawer left and right, it looked as if the liquid display was lagging behind, and black gaps started to appear between the GLSurfaceView and the rest of the app.

You may have noticed this yourself anytime you used SurfaceView to play videos, or if you tried showing the camera viewfinder in your app.

To understand why this happened you have to understand the second part of the graphics pipeline:

SurfaceFlinger

This is SurfaceFlinger.

It gathers together all the UI elements (Surfaces) from various parts of Android, and assembles them together for the final display. A “Surface” is just an image buffer that you can draw part of your UI onto, which then gets passed around the Android system.

Your app creates one of these Surfaces, then sends it to SurfaceFlinger. Anything you want to show up in your app has to go through SurfaceFlinger. The other Surfaces you see in the diagram above are the navigation bar and the status bar, and they’re created by the Android operating system.

SurfaceFlinger can refresh the screen up to 60 times a second, so your app needs to keep up with that for the smoothest possible experience.
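The arithmetic behind that budget is worth spelling out. The sketch below is plain Java with hypothetical names (FrameBudget, budgetMicros, framesConsumed); it simply divides one second by the refresh rate and rounds a piece of work up to whole frames.

```java
/** Frame-budget arithmetic for a given refresh rate. Illustration only. */
class FrameBudget {

    /** Time available per frame, in whole microseconds (truncated). */
    static long budgetMicros(int refreshHz) {
        return 1_000_000L / refreshHz;
    }

    /**
     * Number of refresh intervals that a piece of work occupies, rounded up.
     * Anything above 1 means at least one refresh passed with no new frame.
     */
    static int framesConsumed(long workMicros, int refreshHz) {
        return (int) ((workMicros * refreshHz + 999_999L) / 1_000_000L);
    }
}
```

At 60 refreshes a second, budgetMicros(60) gives 16666 microseconds, which is where the "about 16 ms" figure comes from. A task that takes 40 ms on the UI thread spans framesConsumed(40_000, 60) = 3 refresh intervals, so the screen misses two updates.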

The entire UI thread we saw earlier happens in the application process in the top left. Your app uses draw calls to draw to its own Surface, then sends it to be displayed.

SurfaceView

So how does GLSurfaceView fit into this?

SurfaceView, and also GLSurfaceView, break this pattern. SurfaceView is actually a window through your app’s Surface to another Surface behind it.

So why would you use this? The Surface behind SurfaceView can run on a separate thread, or even in a separate process. It’s useful when you want to offload heavy graphics processing from the UI thread. Common uses include video playback, the camera viewfinder, and 3D games.

This also implies that not everything that shows up on the screen has to happen on the UI thread. In fact, OpenGL rendering can happen on any thread. Only changes that affect views have to happen in the Single Threaded Model.

The Surface behind the SurfaceView gets pulled into SurfaceFlinger in the same way your app’s Surface does. Here the Surfaces are stitched together.

Unfortunately, they are not necessarily in sync. Because the two Surfaces are rendered independently, on separate threads or even in separate processes, their timing is not perfectly matched. The OpenGL Surface may take longer to load than your app, so the “window” (view) will show up black until the OpenGL Surface is ready.

Furthermore, if you move the “window”, the Surface behind it will try to keep up, but it will usually lag behind. The only way you can perfectly synchronize any two views is if they are drawn on the same Surface.

So how can you synchronize these two Surfaces if you still want the liquid effect to render off the UI thread?

TextureView

TextureView reverses this whole system. TextureView allows your app’s UI thread to include the output from another Surface right alongside the other views. In this way, it acts just like SurfaceFlinger, taking in Surfaces and stitching them together. Just as SurfaceView was a “View to a Surface”, TextureView is a “View to a Texture”.

It passes the completed drawing to your UI thread as a SurfaceTexture. A SurfaceTexture is a combination of a Surface and a Texture. It is a Surface because that is what Android would normally draw to, and it is a Texture because that is the kind of image buffer that OpenGL uses. It is the OpenGL equivalent of what “Surface” is to Android.

Since your UI is drawn on the GPU using OpenGL, a Texture feels right at home when it comes time to draw your views.

To collect that output, you have to implement TextureView.SurfaceTextureListener. Every time a frame is ready, your implementation is called on the UI thread and handed the SurfaceTexture. This lets you take the Texture into your UI thread. Once it’s incorporated into the UI views, it gets processed just like any other view.

GLTextureView

There are a lot of challenges involved in converting a GLSurfaceView into a TextureView. This is what we had to do in order to get the seamless experience we wanted. We needed to control the frame rate to be battery-efficient. Otherwise TextureView can draw over 70 frames a second, which, though amazing, is not battery-efficient.
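One simple way to cap a frame rate is to skip any frame that arrives too soon after the previous one. The sketch below shows that idea in plain Java. It is an assumption about how such a limiter could look, not the code from the Second Cup app, and FrameLimiter and shouldRender() are hypothetical names.

```java
/** Skips frames that arrive sooner than the minimum interval. Illustration only. */
class FrameLimiter {
    private final long minIntervalNanos;
    private long lastFrameNanos;
    private boolean hasRendered = false;

    FrameLimiter(int maxFramesPerSecond) {
        if (maxFramesPerSecond <= 0) {
            throw new IllegalArgumentException("maxFramesPerSecond must be positive");
        }
        this.minIntervalNanos = 1_000_000_000L / maxFramesPerSecond;
    }

    /**
     * Returns true if a frame may be rendered at the given time, and records it.
     * Returns false if the previous frame was rendered too recently.
     */
    boolean shouldRender(long nowNanos) {
        if (hasRendered && nowNanos - lastFrameNanos < minIntervalNanos) {
            return false;
        }
        lastFrameNanos = nowNanos;
        hasRendered = true;
        return true;
    }
}
```

A render loop would call shouldRender(System.nanoTime()) before drawing each frame. With a cap of 30 frames a second and frames offered every 14 ms (roughly the 70 frames a second mentioned above), only every third frame gets drawn.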

If you don’t want to do this conversion yourself, you can use a convenience class I made that allows you to swap out a GLSurfaceView for a TextureView. Linked here on GitHub. Here’s how you’d use it:

class MyGLView extends GLSurfaceView { ... }

becomes

class MyGLView extends GLTextureView { ... }

Not all the GLSurfaceView methods are accounted for yet, but the most important parts are there.

LiquidFun

You can create a sense of wonder in your users by going beyond what your users expect, even what they think is possible. This part talks about the liquid effect itself, how it’s accomplished, and how you can use it in your apps too.

When we ported the LiquidFun Paint code, this is how it looked in our app:

The liquid had translucent edges. This was the out-of-the-box experience.

Second Cup’s branding, however, has a cel-shaded look. It uses solid colours. In order to create that effect we had to manipulate the shaders that render the liquid to the screen.

LiquidFun and Box2D

The key to creating the liquid effect is an open source library called LiquidFun. It is (was?) developed by Google.

LiquidFun is an extension of a popular open-source 2D physics library called Box2D. Both Box2D and LiquidFun are written in C++. These libraries are packaged with the Second Cup app, and are called from the app’s Java code anytime physics calculations are needed.

JNI, SWIG

Communication from Java in and out of C++ libraries is done through the JNI (Java Native Interface). Programming for the JNI is very challenging. It is sparsely documented and little used.

LiquidFun uses a tool to help create a bridge between Java and C++, called SWIG. SWIG takes away a lot of the repetitive work from programming for the JNI, but it is very difficult to set up. Since we needed to alter some of the Box2D code for our project, we had to modify and re-run SWIG as well.

LiquidSurface

Fortunately, you won’t have to do any of this. I’ve open-sourced a library called LiquidSurface that takes the hard work out of using LiquidFun. It comes with the C++ libraries already pre-built, so you don’t have to run ndk-build, or set up SWIG at all.

**Currently LiquidSurface is not available through Gradle or Maven. I am having difficulty setting up my repo through Bintray. Any guidance or pointers to helpful documentation are appreciated.**

Benefits of LiquidSurface over LiquidFun and/or LiquidFun Paint include:

- Pre-built native libraries (everything’s set up for you).

- Plug and play into any app.

- Create multiple, overlapping Particle Systems (not available in LiquidFun Paint).

- Incorporates solid objects (limited to sprites for now).

- Create more complex shapes from sets of vertices.

To use it, simply add either the TextureView version or the GLSurfaceView version of the view to your XML:

<com.mycardboarddreams.liquidsurface.LiquidSurfaceView
    android:id="@+id/liquid_surface"
    android:layout_width="match_parent"
    android:layout_height="match_parent"/>

<com.mycardboarddreams.liquidsurface.LiquidTextureView
    android:id="@+id/liquid_surface"
    android:layout_width="match_parent"
    android:layout_height="match_parent"/>

Then override onCreate() and add this code:

ILiquidWorld liquidWorld = (ILiquidWorld) findViewById(R.id.liquid_surface);
ParticleGroup group = new ParticleGroup(MathHelper.createCircle(new Vector2f(500, 500), 400, 8));
liquidWorld.createParticles(group);

You need to add two lines to onResume() and onPause() as well. See the readme here.

Liquid Appearance

LiquidFun represents liquids as groups of particles that interact with one another. It then uses those particles’ positions to decide how to draw to the screen. When rendering, LiquidFun goes through the following steps:

Step 1: Box2D

For each step of time, Box2D calculates the position and motion of the particles in 2D.
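To give a feel for what "one step of time" means, here is a deliberately tiny plain-Java sketch that moves a single particle under gravity. It is not Box2D's or LiquidFun's solver, which also handle collisions, pressure and particle interaction; ParticleStepSketch and its fields are hypothetical names, and the simple Euler-style update is an assumption chosen for brevity.

```java
/** One particle advanced by a fixed time step. Illustration only, not Box2D. */
class ParticleStepSketch {
    float x, y;    // position
    float vx, vy;  // velocity

    ParticleStepSketch(float x, float y, float vx, float vy) {
        this.x = x;
        this.y = y;
        this.vx = vx;
        this.vy = vy;
    }

    /** Applies gravity to the velocity, then moves the particle by the new velocity. */
    void step(float dt, float gravityY) {
        vy += gravityY * dt;
        x += vx * dt;
        y += vy * dt;
    }
}
```

Starting at (0, 10) with velocity (2, 0), one call to step(0.5f, -10f) leaves the particle at (1, 7.5) with a vertical velocity of -5. The physics library does this kind of update for every particle, every step, before any drawing happens.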

Step 2: First drawing pass

Then the first drawing pass draws small images at each particle’s location, and colours them to the liquid’s colour. The shader that does this is assets/shaders/water_particle.glslv. The extension “glslv” denotes a vertex shader, i.e. OpenGL Shading Language, Vertex.

This shader also has a property called “weight”. Each particle has its own weight, which can be used to darken the colour of the deeper parts of the liquid.

The drawing from this stage is not shown on the screen. It is drawn onto an internal, hidden buffer. Once this stage is finished, the output image is used as the input to the next stage.

Step 3: Threshold

The second stage takes the drawing from the first stage, and first blurs it. It then crops all pixels that are more transparent than a certain threshold. The line in the diagram below represents that threshold.

The shader in LiquidFun Paint that crops the edges of the liquid is assets/shaders/screen.glslf. The extension “glslf” denotes a fragment shader, i.e. OpenGL Shading Language, Fragment.

The outcome of these two stages looks like the liquid from the original LiquidFun Paint app. It has translucent edges.
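The threshold idea is easier to see on the CPU than in a shader. The sketch below is plain Java, not the GLSL from screen.glslf, and it assumes the simplest reading of the description above: pixels whose alpha falls below the threshold are dropped, and the rest keep their alpha. AlphaThresholdSketch, threshold() and countVisible() are hypothetical names.

```java
/** CPU model of the threshold pass on one row of blurred alpha values. Illustration only. */
class AlphaThresholdSketch {

    /** Drops every pixel that is more transparent than the threshold; keeps the rest as-is. */
    static float[] threshold(float[] blurredAlpha, float alphaThreshold) {
        float[] out = new float[blurredAlpha.length];
        for (int i = 0; i < blurredAlpha.length; i++) {
            out[i] = blurredAlpha[i] >= alphaThreshold ? blurredAlpha[i] : 0f;
        }
        return out;
    }

    /** Counts the pixels that survived the threshold. */
    static int countVisible(float[] alpha) {
        int visible = 0;
        for (float a : alpha) {
            if (a > 0f) {
                visible++;
            }
        }
        return visible;
    }
}
```

Take a blurred blob whose alpha across one row is 0.1, 0.5, 0.75, 0.9, 0.75, 0.5, 0.1. With a threshold of 0.7, three pixels survive. Raise the threshold to 0.8 and only the centre pixel is left, which is why a higher threshold trims away more of the soft edge.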

In order to achieve the cel-shaded look we were going for, we needed to adjust the shader settings. These settings are conveniently located in a JSON file in assets. The JSON file contains the following:

{

"waterParticlePointSprite": {

"uDiffuseTexture": "textures/particle_blurred.png",

"particleSizeScale" : 2.5,

"weightScale": 0.05,

"weightRangeShift": 0.8,

"weightCutoff": 0.7

},

"waterParticleToScreen": {

"alphaThreshold": 0.7

},

"otherParticlePointSprite": {

"uDiffuseTexture": "textures/particle_brush.png",

"particleSizeScale" : 3.2

},

"otherParticleToScreen": {

"alphaThreshold": 0.8

},

"paper": {

"uDiffuseTexture": "textures/canvas.png"

}

}

We tweaked a few of the properties. Most importantly, we increased the threshold value (“alphaThreshold”) to cut off more of the translucent edges.

Finally, we increased the size of the particles (“particleSizeScale”) to create a fuller liquid.

In short, two rendering stages are necessary to create a liquid-like effect: the particle drawing pass and the threshold pass.

Release

On release day, the liquid worked flawlessly. Feedback about the liquid was overwhelmingly positive. People were downloading the app just to play with this innovative feature.

It was also a satisfying accomplishment for us engineers. We had been driven partly by curiosity, and partly by the love of something new and challenging.

Conclusion

The number of innovative things you can do once you learn the details of the Android graphics pipeline is endless. I hope this article inspires you to try something new in any app you create. You just have to find something to inspire you.

You have the tools at your disposal. Now go out there and create something amazing.
